AI Authorship and Political Fundraising

2023-05-28

Originally posted on LinkedIn

As a follow-up to some thoughts about the ethical responsibility to declare when political campaign communications are crafted by generative AI, I realized the pressing need to define exactly what "AI-written" means.

Establishing whether a piece of content is "AI-written" or "human-written" isn't straightforward, because AI involvement in content creation falls along a continuum. However, a potential guiding principle could be rooted in the understanding of "authorship."

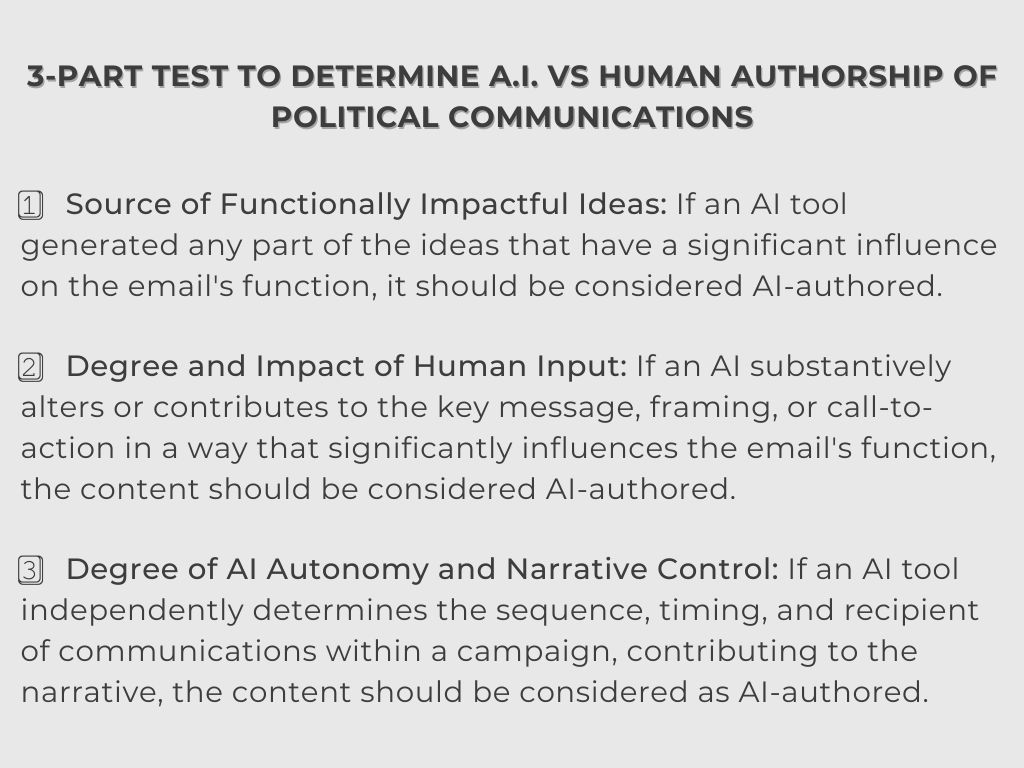

To determine if a political campaign message should be classified as human-authored, I propose a 3-part test:

Test 1: Source of Functionally Impactful Ideas

This test evaluates the origin of the core content or ideas. If an AI tool primarily generated the ideas that drive the function of the message, the content should be considered AI-authored. On the other hand, if an AI tool was merely instrumental in refining or enhancing a human's original ideas without contributing new functionally impactful ideas, the content passes this test.

Test 2: Degree and Impact of Human Input

This test evaluates the degree and impact of human input in crafting the key message, framing, or call-to-action. If a human substantively alters or contributes to these crucial elements, the content passes this test. Conversely, if an AI substantively alters or contributes to these crucial elements, the content should be considered AI-authored.

Test 3: Degree of AI Autonomy and Narrative Control

This test assesses the degree of AI autonomy, especially regarding content distribution. In the context of a political fundraising campaign, each piece of communication - be it an email, text, or ad - forms part of a larger narrative, like chapters in a book. Together, these chapters construct the complete campaign narrative, each contributing to the overall message. If an AI tool independently determines the sequence, timing, and recipients of these communications, it effectively helps shape the narrative. Therefore, if the AI tool operates without substantial human influence in these decisions, the content should be considered AI-authored. If a human is making these key decisions, even based on AI-provided data, the content passes this test.

This approach isn't flawless, but it gives us a place to start. The test isn't binary but rather a measure of the degree of AI's involvement. In my view, clearly delineating AI-authored from human-authored content can help maintain ethical standards in political fundraising, and potentially more broadly.
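For anyone who wants to apply the three tests consistently across many messages, they can be written down as a rough checklist in code. The Python below is only an illustrative sketch, not a definitive implementation. The names `MessageAssessment`, `failed_tests`, and `classify` are hypothetical, and each test is collapsed to a yes/no answer from a human reviewer, which is a simplification of what is really a matter of degree. It assumes a message counts as human-authored only if it passes all three tests.

```python
from dataclasses import dataclass


@dataclass
class MessageAssessment:
    """Hypothetical reviewer answers for one campaign message."""
    ai_originated_core_ideas: bool     # Test 1: did AI supply the functionally impactful ideas?
    human_shaped_key_elements: bool    # Test 2: did a human substantively shape message, framing, or call-to-action?
    human_controls_distribution: bool  # Test 3: does a human decide sequence, timing, and recipients?


def failed_tests(m: MessageAssessment) -> list[int]:
    """Return the numbers of the tests the message fails."""
    failed = []
    if m.ai_originated_core_ideas:
        failed.append(1)
    if not m.human_shaped_key_elements:
        failed.append(2)
    if not m.human_controls_distribution:
        failed.append(3)
    return failed


def classify(m: MessageAssessment) -> str:
    """Assumption: failing any one test makes the message AI-authored."""
    return "AI-authored" if failed_tests(m) else "human-authored"


# Example: a human wrote the appeal and AI only polished it,
# but an AI tool chose when and to whom it was sent.
example = MessageAssessment(
    ai_originated_core_ideas=False,
    human_shaped_key_elements=True,
    human_controls_distribution=False,
)
print(classify(example), failed_tests(example))
```

Returning the list of failed tests, rather than only a label, keeps some sense of how far AI involvement went in a given message.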

I welcome any and all thoughts on this.